Project Background

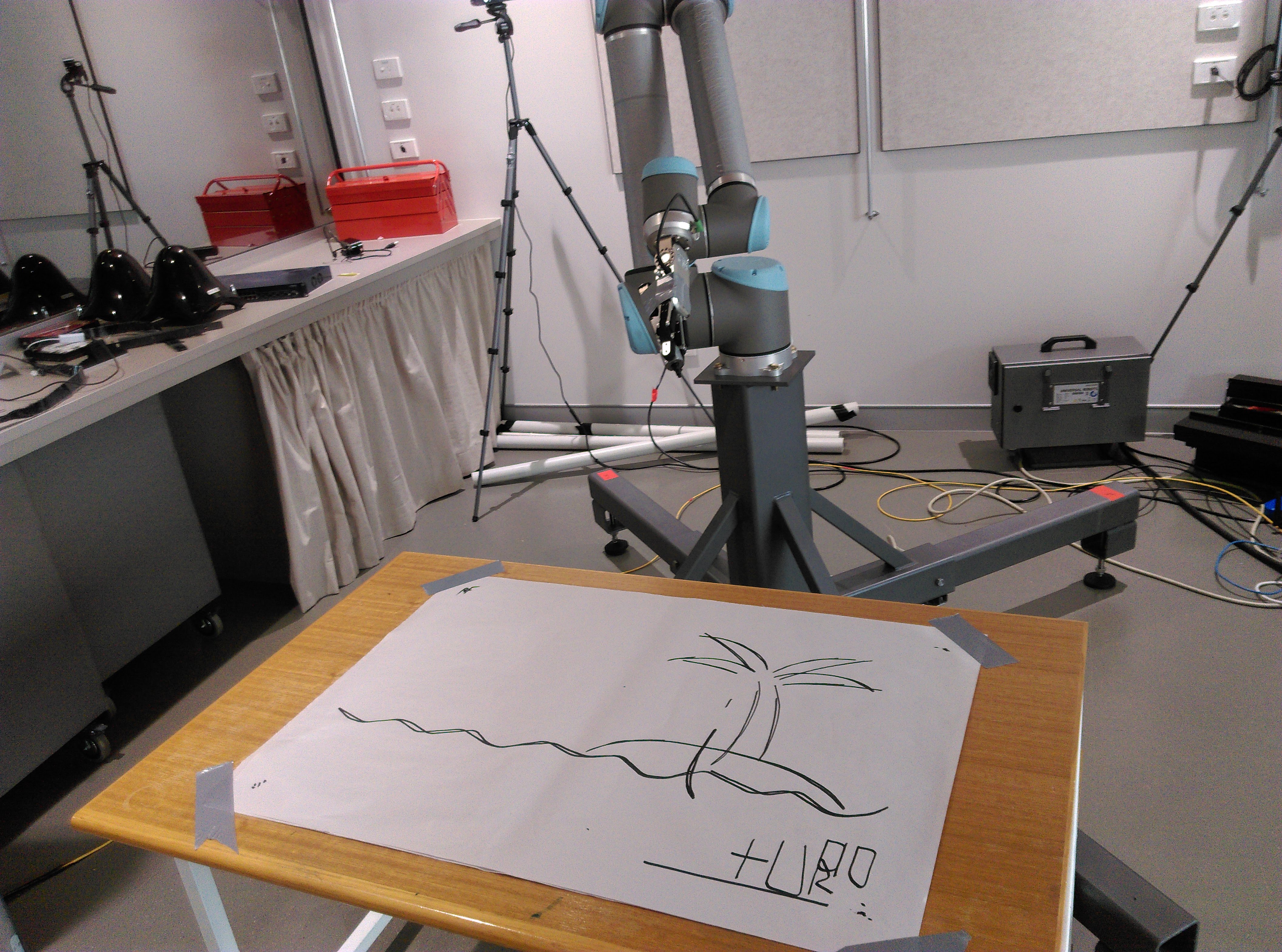

Before officially starting my doctorate, I worked with a professor at the University of Aalborg and my PhD supervisor on a project to program a robotic art exhibit. This exhibit was set up at the Questacon—National Science and Technology Centre museum. It featured an interactive experience with a UR10 robot. The robot would collaborate with participants by drawing sketches, creating an engaging and unique experience. The robot held a whiteboard marker and was positioned in front of a table with a blank canvas. It would partially draw a sketch and encourage a human participant to contribute to the canvas through gestures. This back-and-forth process continued for several iterations until the robot signed its name on the canvas and then signalled for the human to sign their name, concluding the interaction. The image below shows our initial lab setup at the University of Canberra after one of the drawing tests.

The drawing robot during development in our lab.

This was my first robotics project at the university in which I took a prominent, hands-on engineering role. My primary contributions were programming the motion planning and developing interfaces that let human-robot interaction (HRI) researchers intuitively design understandable gestures on the robotic arm. We also had to ensure that the robot could safely draw on a flat surface without straining the arm during this sliding, surface-contact motion. Alongside another technical researcher and two HRI researchers from Denmark, we then deployed the exhibit in an in-the-wild setting at Questacon while collecting data for a human-centric study. Overall, the project was a success, resulting in several publications, awards, and a redeployment in Denmark, as outlined in this article.

Technical Development

The drawing pipeline started from template images: binary images of the sketches the robot would draw. Contours were extracted from these templates with the OpenCV library and simplified with the Ramer-Douglas-Peucker algorithm [Douglas 73]. The resulting line paths were then converted into trajectories for the robot’s tool centre point (TCP), allowing it to reproduce the key lines and features of each image on the canvas in front of it. Because the drawings were driven entirely by these files, the HRI collaborators could create and refine the templates without modifying the code base. For drawing, a whiteboard marker encased in a steel box with a spring (for compliance) was attached to the robot’s gripper, so the marker could press against the table and canvas surface safely.
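
As a rough illustration of this pipeline, the sketch below simplifies an extracted contour with Ramer-Douglas-Peucker and turns it into pen waypoints. OpenCV provides both steps natively (`cv2.findContours` for extraction and `cv2.approxPolyDP`, which implements Douglas-Peucker simplification); here the simplification is re-implemented in plain Python so the example runs without OpenCV. The helpers `pixels_to_canvas` and `stroke_to_waypoints`, the top-left canvas origin, and the 2 cm pen-lift height are hypothetical choices for illustration, not the project’s actual code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Waypoint3 = Tuple[float, float, float]


def perpendicular_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to the infinite line through a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        # Degenerate chord (e.g. a closed contour): use point-to-point distance.
        return math.hypot(p[0] - a[0], p[1] - a[1])
    return abs(dy * p[0] - dx * p[1] + b[0] * a[1] - b[1] * a[0]) / length


def rdp(points: List[Point], epsilon: float) -> List[Point]:
    """Ramer-Douglas-Peucker: drop points closer than epsilon to the chord."""
    if len(points) < 3:
        return list(points)
    # Find the interior point farthest from the chord between the endpoints.
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], points[0], points[-1])
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        # All interior points are within tolerance: keep only the endpoints.
        return [points[0], points[-1]]
    # Otherwise keep the farthest point and simplify each half recursively.
    left = rdp(points[: index + 1], epsilon)
    right = rdp(points[index:], epsilon)
    return left[:-1] + right  # avoid duplicating the split point


def pixels_to_canvas(path: List[Point], image_size: Tuple[int, int],
                     canvas_size_m: Tuple[float, float]) -> List[Point]:
    """Scale pixel coordinates to metres, preserving the image aspect ratio.

    Hypothetical convention: image rows grow downward, and the canvas y axis
    is assumed to point the same way from a top-left origin.
    """
    scale = min(canvas_size_m[0] / image_size[0], canvas_size_m[1] / image_size[1])
    return [(x * scale, y * scale) for x, y in path]


def stroke_to_waypoints(path_m: List[Point], pen_up_z: float = 0.02,
                        pen_down_z: float = 0.0) -> List[Waypoint3]:
    """Approach above the first point, draw the stroke, then lift the pen."""
    start, end = path_m[0], path_m[-1]
    waypoints = [(start[0], start[1], pen_up_z)]
    waypoints += [(x, y, pen_down_z) for x, y in path_m]
    waypoints.append((end[0], end[1], pen_up_z))
    return waypoints
```

For example, `rdp([(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)], 0.1)` keeps only the corner and returns `[(0, 0), (2, 0), (2, 2)]`. The `epsilon` tolerance trades drawing fidelity against the number of TCP waypoints the robot has to visit.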

Our HRI collaborators played a crucial role in development: beyond drawing images, they needed a range of hardcoded gestures so the robot could communicate with participants during the collaborative drawing task. To meet this requirement, a recording module was developed that let them move the robot to desired configurations, record those poses, set motion parameters (e.g., speed and pauses), and interactively assemble them into gestures. The framework saved these gestures to a CSV file, which Python code read back to replay the gestures during operation. The Python-URX library was used to control the UR10 for all motions.
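
A minimal sketch of such a record-and-replay format is shown below, assuming one CSV row per recorded pose with six joint angles plus acceleration, speed, and a pause. The column layout, `GesturePose`, and the helper functions are hypothetical; robot access is passed in as callables so the file handling can be exercised without hardware.

```python
import csv
import io
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, TextIO

# Hypothetical CSV layout, one row per recorded pose:
# gesture,j0,j1,j2,j3,j4,j5,acc,vel,pause_s
FIELDS = ["gesture"] + [f"j{i}" for i in range(6)] + ["acc", "vel", "pause_s"]


@dataclass
class GesturePose:
    joints: List[float]  # six joint angles (radians)
    acc: float           # joint acceleration for the move
    vel: float           # joint speed for the move
    pause_s: float       # dwell time after reaching the pose


def record_pose(read_joints: Callable[[], Sequence[float]], acc: float,
                vel: float, pause_s: float) -> GesturePose:
    """Capture the arm's current joint angles (e.g. after hand-guiding it)."""
    return GesturePose(list(read_joints()), acc, vel, pause_s)


def save_gestures(gestures: Dict[str, List[GesturePose]], stream: TextIO) -> None:
    writer = csv.writer(stream)
    writer.writerow(FIELDS)
    for name, poses in gestures.items():
        for pose in poses:
            writer.writerow([name, *pose.joints, pose.acc, pose.vel, pose.pause_s])


def load_gestures(stream: TextIO) -> Dict[str, List[GesturePose]]:
    gestures: Dict[str, List[GesturePose]] = {}
    for row in csv.DictReader(stream):
        pose = GesturePose(
            joints=[float(row[f"j{i}"]) for i in range(6)],
            acc=float(row["acc"]),
            vel=float(row["vel"]),
            pause_s=float(row["pause_s"]),
        )
        # Rows keep their file order, so each gesture replays as recorded.
        gestures.setdefault(row["gesture"], []).append(pose)
    return gestures


def play_gesture(poses: List[GesturePose],
                 move_joints: Callable[[List[float], float, float], None],
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Replay a gesture pose by pose, pausing where the recording asked to."""
    for pose in poses:
        move_joints(pose.joints, pose.acc, pose.vel)
        if pose.pause_s > 0:
            sleep(pose.pause_s)


if __name__ == "__main__":
    # Round-trip through an in-memory CSV and replay with a stand-in mover.
    buf = io.StringIO()
    save_gestures({"wave": [GesturePose([0.0] * 6, 0.5, 0.3, 0.0),
                            GesturePose([0.2, 0, 0, 0, 0, 0], 0.5, 0.3, 1.0)]}, buf)
    buf.seek(0)
    wave = load_gestures(buf)["wave"]
    play_gesture(wave, lambda j, a, v: print("movej", j, a, v), sleep=lambda s: None)
```

On the real arm, `read_joints` and `move_joints` could wrap python-urx calls on a `urx.Robot` instance, for instance `rob.getj` and something like `lambda j, a, v: rob.movej(j, acc=a, vel=v)`; the exact signatures should be checked against the library version in use.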

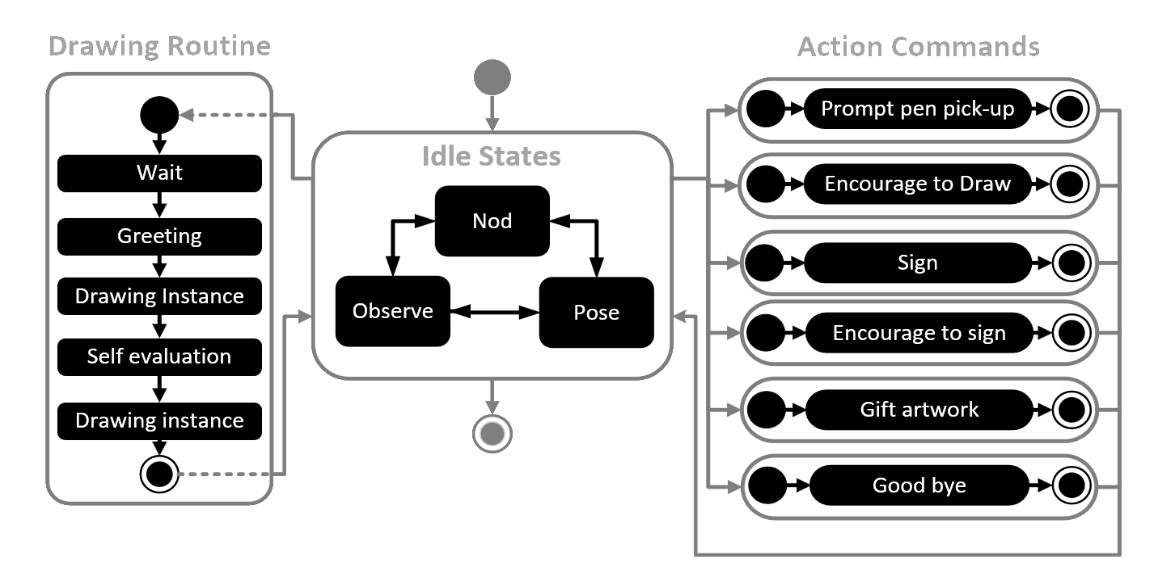

The WOZ framework used to operate the robot, making it appear socially interactive.

The final module was the Wizard of Oz (WOZ) command framework (shown above), which allowed our HRI colleagues to steer the robotic exhibit through the broad phases of the interaction during operation. As the name implies, the framework makes the robot appear intelligent and interactive while a human operator ‘behind the curtain’ actually provides the illusion. Through it, operators could trigger drawing motions, run idle movements, and communicate with the participant via gestures in real time, giving the robot a reactive and intelligent appearance to the human participant.
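
The post does not detail how the WOZ console was implemented. As a minimal sketch, assuming a simple keyboard interface, a console can bind operator keys to labelled behaviours and log each choice, which is also useful when analysing sessions afterwards. `WozConsole`, `make_demo_console`, and the key bindings are hypothetical; the lambdas stand in for calls that would drive the arm.

```python
from typing import Callable, Dict, Iterator, List, Tuple


class WozConsole:
    """Keyboard-driven Wizard-of-Oz console (hypothetical command set).

    Each key is bound to a labelled behaviour; the operator presses keys in
    response to what the participant does, so the robot appears to react.
    """

    def __init__(self) -> None:
        self._bindings: Dict[str, Tuple[str, Callable[[], None]]] = {}
        self.history: List[str] = []  # labels of executed commands, in order

    def bind(self, key: str, label: str, action: Callable[[], None]) -> None:
        self._bindings[key] = (label, action)

    def handle(self, key: str) -> bool:
        """Run the behaviour bound to key; ignore unbound keys."""
        if key not in self._bindings:
            return False  # a mistyped key should never move the robot
        label, action = self._bindings[key]
        self.history.append(label)
        action()
        return True

    def run(self, read_key: Callable[[], str] = input, quit_key: str = "q") -> None:
        """Read operator keys until quit_key is entered."""
        while True:
            key = read_key().strip()
            if key == quit_key:
                break
            if not self.handle(key):
                print(f"unbound key: {key!r}")


def make_demo_console(strokes: Iterator[str], log: List[str]) -> WozConsole:
    """Wire up hypothetical drawing, gesture, and idle commands."""
    console = WozConsole()
    # When the template's strokes run out, fall back to drawing a signature.
    console.bind("d", "draw_next", lambda: log.append("draw " + next(strokes, "signature")))
    console.bind("w", "gesture_wave", lambda: log.append("wave"))
    console.bind("p", "gesture_point_at_canvas", lambda: log.append("point"))
    console.bind("i", "idle", lambda: log.append("idle sway"))
    return console
```

This sketch runs each action synchronously for clarity; a console driving a real arm would more likely hand motions to a separate worker so the operator can interrupt or queue behaviours while the robot is moving.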

A Public Deployment and Paper Publication

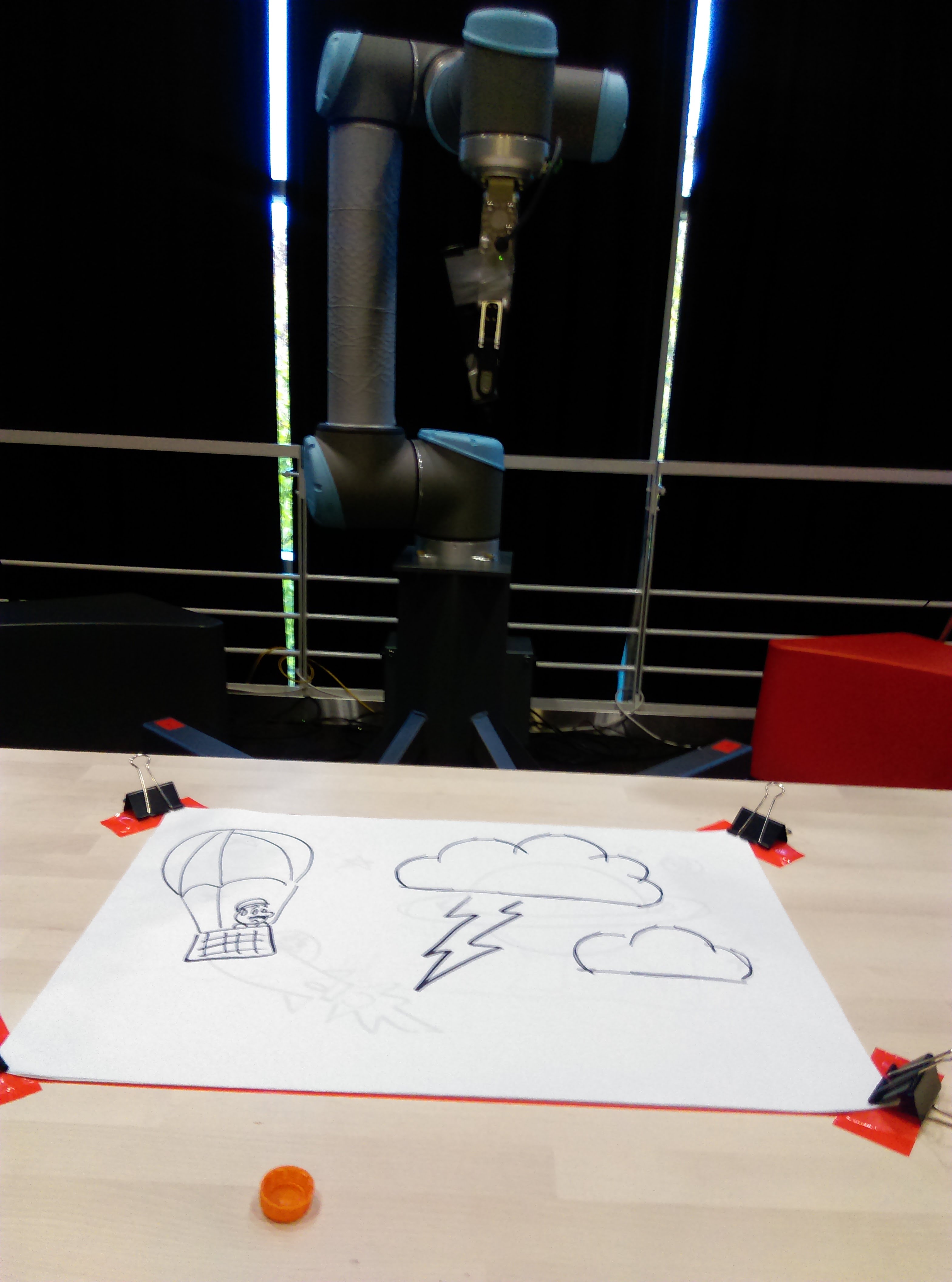

After completing the technical work with collaborators, we then began the deployment process to install this robotic exhibit at Questacon. Generally, the setup was smooth, as we developed a calibration routine for the position of the canvas surface and ensured the project could be easily relocated. The exhibit remained at Questacon for over a week, during which our colleagues collected data for their master’s degrees (which resulted in their own publication [Pedersen 20]). The image below shows the robot during its public deployment.

The exhibit at Questacon.
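
The post does not describe how the canvas calibration worked. One common approach, sketched here purely as an assumption, is to touch the pen tip to three points on the canvas, record the TCP positions, and build a coordinate frame for the canvas plane so that drawing coordinates can be mapped into the robot’s base frame. The three-point procedure and the names `calibrate_canvas` and `canvas_to_base` are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Frame = Tuple[Vec3, Vec3, Vec3, Vec3]  # origin, x_axis, y_axis, normal


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0.0:
        raise ValueError("calibration points are degenerate (coincident or collinear)")
    return _scale(v, 1.0 / n)


def calibrate_canvas(origin: Vec3, x_point: Vec3, y_point: Vec3) -> Frame:
    """Build an orthonormal canvas frame from three probed TCP positions.

    origin:  pen tip touching the canvas corner chosen as (0, 0)
    x_point: pen tip touching a point along the canvas x edge
    y_point: pen tip touching a point roughly along the canvas y edge
    """
    x_axis = _unit(_sub(x_point, origin))
    # Normal direction depends on probe order (right-hand rule: x then y).
    normal = _unit(_cross(x_axis, _sub(y_point, origin)))
    # Re-derive y so it is exactly perpendicular to x even if y_point was off.
    y_axis = _cross(normal, x_axis)
    return origin, x_axis, y_axis, normal


def canvas_to_base(frame: Frame, u: float, v: float, lift: float = 0.0) -> Vec3:
    """Map canvas coordinates (metres) to a position in the robot base frame.

    lift moves along the surface normal, e.g. to raise the pen between strokes.
    """
    origin, x_axis, y_axis, normal = frame
    p = _add(origin, _scale(x_axis, u))
    p = _add(p, _scale(y_axis, v))
    return _add(p, _scale(normal, lift))
```

Because the frame is built from measured points, a slightly tilted table is absorbed into `normal`, which is one way such a routine could keep the pen in contact across the whole canvas after the exhibit is moved.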

During and after the deployment, my technical collaborators and I wrote and published a paper at the International Conference on Social Robotics (ICSR) [Hinwood 18]. The paper covers the material outlined in this post in more depth and argues for using WOZ frameworks to establish a benchmark for human responses to robotic behaviour. Besides being my first lead-author publication, it received the award for best interactive session at ICSR 2018 (see accolades).

Project Deployment in Denmark

Following the award at ICSR, I was invited to recreate the exhibit at the University of Aalborg in Denmark. During a three-month stay, I rebuilt the exhibit on the smaller UR3 robot. An early validation test of the UR3 performing drawing motions can be seen in the video below. This first recreation was deployed at the Danish Museum of Science and Technology. After I returned to Australia, researchers further expanded on this work in collaboration with an artist [Gomez 21]. I’m proud to have contributed to this rewarding and recognised project.

References

[Douglas 73] Douglas, David H., and Thomas K. Peucker. “Algorithms for the reduction of the number of points required to represent a digitized line or its caricature.” Cartographica: the international journal for geographic information and geovisualization 10.2 (1973): 112-122.

[Gomez 21] Gomez Cubero, Carlos, et al. “The robot is present: Creative approaches for artistic expression with robots.” Frontiers in Robotics and AI 8 (2021): 662249.

[Hinwood 18] Hinwood, David, et al. “A proposed wizard of OZ architecture for a human-robot collaborative drawing task.” International Conference on Social Robotics. Cham: Springer International Publishing, 2018.

[Pedersen 20] Pedersen, Jonas E., et al. “I like the way you move: A mixed-methods approach for studying the effects of robot motion on collaborative human robot interaction.” Social Robotics: 12th International Conference, ICSR 2020, Golden, CO, USA, November 14–18, 2020, Proceedings. Springer International Publishing, 2020.